Prompt Engineering as a Capability: Skills, Teams & Governance

TL;DR:

Prompt engineering is evolving from individual experimentation to an organizational capability.

Companies must define roles, training pathways, and governance to scale prompt skills responsibly.

Success depends on structured collaboration between business users, AI specialists, and compliance teams.

The new focus: from “writing good prompts” to “designing prompt-systems that learn, adapt, and govern themselves.”

The Bridge Between Automation and Understanding

In my work on the AI Leadership Paradox, I argued that the more sophisticated AI systems become, the more crucial human judgment becomes. Prompt engineering is where this principle plays out in practice: it’s the bridge between automation and understanding. It’s how organizations keep humans in the loop, not through oversight alone but by designing the language layer that guides machine reasoning.

The Emerging Discipline

Prompt engineering started as an art: a mix of intuition, experimentation, and online tips on “how to talk to ChatGPT.” But what began as an individual craft is fast becoming a strategic capability. Organizations are realizing that the difference between a clever experiment and a repeatable process lies in structure: in how prompts are designed, shared, versioned, and governed across teams.

Just as DevOps turned software delivery into a managed discipline, and design systems made brand consistency scalable, PromptOps is emerging as the next layer of organizational maturity in AI. Tools like Anthropic’s Claude Skills already point the way by turning prompts into structured, callable assets: units of reusable intelligence that can be invoked, audited, and improved over time. Guardrail platforms such as Lakera Guard complement this by screening the prompts that flow through these systems for risks like prompt injection.

Yet technology is only part of the story. The real question for leaders is how to organize and scale human capability around it. That’s where the organizational design challenge begins.

What Roles Need What Competencies

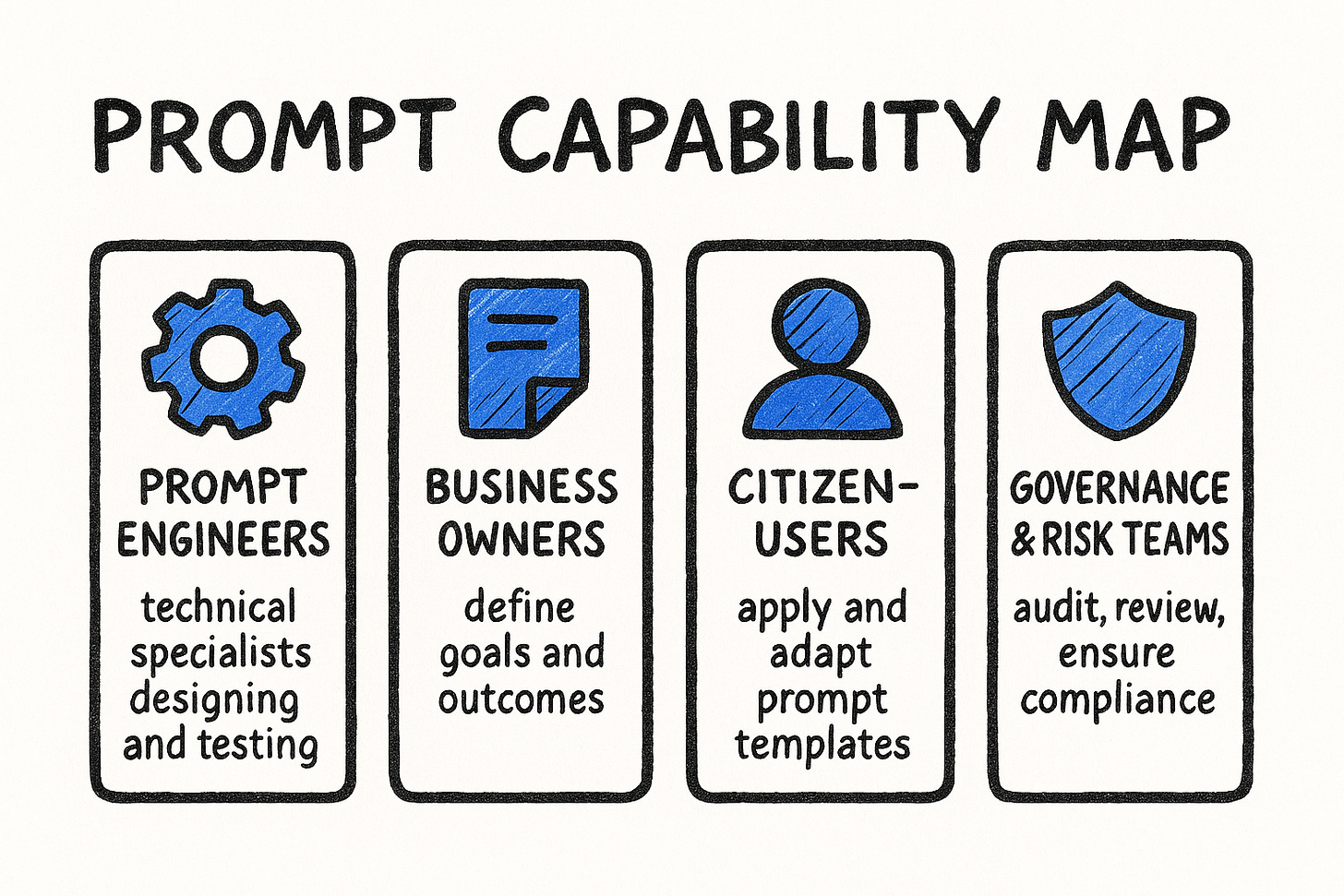

Building a prompt-capable organization means aligning four types of roles, each with distinct but complementary skills.

Prompt engineers are the technical specialists who understand how large language models behave under different configurations - temperature settings, context windows, chain-of-thought scaffolding. They design and maintain prompt templates, measure their accuracy, and version them like code. These are the people who know why changing a single word can shift the entire output and how to prevent that from causing problems.

Business-domain owners translate operational expertise into prompt requirements. They know the processes, the regulatory constraints, and the decisions AI needs to support. Their role is to ensure prompts produce outcomes that are useful, not just impressive. They’re the bridge between what the AI can do and what the business actually needs.

Citizen-users and knowledge workers form the broad middle layer. They don’t need to master LLM internals, but they should learn to use pre-approved templates, adapt tone and context, and know when to escalate to specialists. This is where scale happens: when hundreds or thousands of employees can safely and effectively use AI tools in their daily work.

Finally, governance and risk teams keep the system accountable. They review prompt libraries, track changes, and monitor for bias or drift. They don’t just manage compliance; they shape the guardrails that allow creativity to thrive safely. This skills matrix reflects a broader truth, one the Human Technology Institute highlights in its 2024 snapshot “People, Skills and Culture for Effective AI Governance”: AI readiness is less about tools than about culture.
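To make “version them like code” and “measure their accuracy” concrete, here is a minimal Python sketch of what prompt engineers maintain and governance teams review. It is illustrative only: `PromptTemplate`, `accuracy`, and `fake_model` are hypothetical names, and `fake_model` is a deterministic stand-in for a real LLM call so the example runs offline. Accuracy here means exact match against labelled test cases, which is one simple choice among many.

```python
from dataclasses import dataclass
from string import Template
from typing import Callable

# A model call takes (prompt, temperature) and returns text.
ModelFn = Callable[[str, float], str]


@dataclass(frozen=True)
class PromptTemplate:
    """Hypothetical prompt template, versioned like a code artifact."""
    name: str
    version: str      # semantic version, bumped on every wording change
    text: str         # $placeholders use string.Template syntax
    temperature: float = 0.2

    def render(self, **values: str) -> str:
        # substitute() raises KeyError on a missing placeholder, so an
        # incomplete prompt never reaches the model silently.
        return Template(self.text).substitute(**values)


def accuracy(template: PromptTemplate,
             cases: list[tuple[dict[str, str], str]],
             call_model: ModelFn) -> float:
    """Share of labelled test cases whose output exactly matches the expected answer."""
    if not cases:
        return 0.0
    hits = sum(
        call_model(template.render(**inputs), template.temperature).strip() == expected
        for inputs, expected in cases
    )
    return hits / len(cases)


def fake_model(prompt: str, temperature: float) -> str:
    # Stand-in for an LLM: it answers in one word only when told to.
    text = prompt.lower()
    label = "billing" if "invoice" in text else "technical"
    return label if "exactly one word" in text else f"I think this is {label}."


cases = [({"ticket": "My invoice is wrong"}, "billing"),
         ({"ticket": "The app crashes on login"}, "technical")]

v1 = PromptTemplate("ticket-triage", "1.0.0",
                    "Classify this ticket as billing or technical: $ticket")
v2 = PromptTemplate("ticket-triage", "1.1.0",
                    "Classify this support ticket. Answer with exactly one word, "
                    "billing or technical.\nTicket: $ticket")

for t in (v1, v2):
    print(t.name, t.version, accuracy(t, cases, fake_model))
```

Only the wording changed between 1.0.0 and 1.1.0, yet the measured accuracy moves from 0.0 to 1.0. Keeping every version next to its score is what lets a reviewer see why a change was made and roll it back if needed.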

Training Non-Tech Staff in Prompt Skills

Training non-technical staff now offers one of the fastest returns on AI investment. When employees learn to “speak AI” clearly, both productivity and trust accelerate. The barrier to value creation drops sharply when people understand how to frame their requests effectively.

The most effective programs blend short, contextual learning with hands-on experimentation. Instead of week-long courses, offer micro-learning moments: five- to ten-minute sessions on clarity, context, and examples. Then complement them with practical labs where participants refine prompts and see how small adjustments shift outputs. This approach respects people’s time while building real competence.

Some organizations are introducing internal prompt marketplaces - shared repositories where employees post “prompt recipes,” tag them by use case, and comment on improvements. It’s a simple idea, but it creates a living system of knowledge, a collective memory that evolves with each project. When someone solves a tricky prompt challenge, that solution becomes available to everyone.

IBM’s Enterprise Guide to AI Governance (2024) calls this “operational literacy,” the bridge between responsible AI principles and everyday practice. It’s how governance becomes embedded rather than imposed, and how good practices spread organically through the organization.
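At its core, an internal prompt marketplace is a tagged, commented repository. The sketch below shows that shape as an in-memory Python store. `Recipe`, `PromptMarketplace`, and the example recipes are hypothetical; a real deployment would more likely sit on a database or an existing knowledge-management tool than on a dictionary.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Recipe:
    """A hypothetical shared 'prompt recipe' posted by an employee."""
    name: str
    author: str
    prompt: str
    tags: set[str]
    comments: list[str] = field(default_factory=list)


class PromptMarketplace:
    """Hypothetical in-memory stand-in for a shared prompt repository."""

    def __init__(self) -> None:
        self._recipes: dict[str, Recipe] = {}
        self._by_tag: dict[str, set[str]] = defaultdict(set)  # tag -> recipe names

    def post(self, recipe: Recipe) -> None:
        if recipe.name in self._recipes:
            raise ValueError(f"recipe {recipe.name!r} already exists")
        self._recipes[recipe.name] = recipe
        for tag in recipe.tags:
            self._by_tag[tag.lower()].add(recipe.name)  # tags are case-insensitive

    def find(self, tag: str) -> list[Recipe]:
        # .get() avoids creating empty entries for unknown tags
        names = self._by_tag.get(tag.lower(), set())
        return [self._recipes[n] for n in sorted(names)]

    def comment(self, name: str, text: str) -> None:
        # Improvements are attached to the recipe, so the next user sees them.
        self._recipes[name].comments.append(text)


market = PromptMarketplace()
market.post(Recipe("meeting-summary", "dana",
                   "Summarize these notes as decisions and action items:\n{notes}",
                   {"summarization", "Meetings"}))
market.post(Recipe("contract-risk-scan", "lee",
                   "List the clauses in this contract that shift liability to us:\n{contract}",
                   {"legal", "review"}))
market.comment("meeting-summary",
               "Asking for owners and due dates made the action items usable.")

print([r.name for r in market.find("meetings")])
```

The comment thread is the part that turns a file share into collective memory: the fix one person discovered travels with the recipe.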

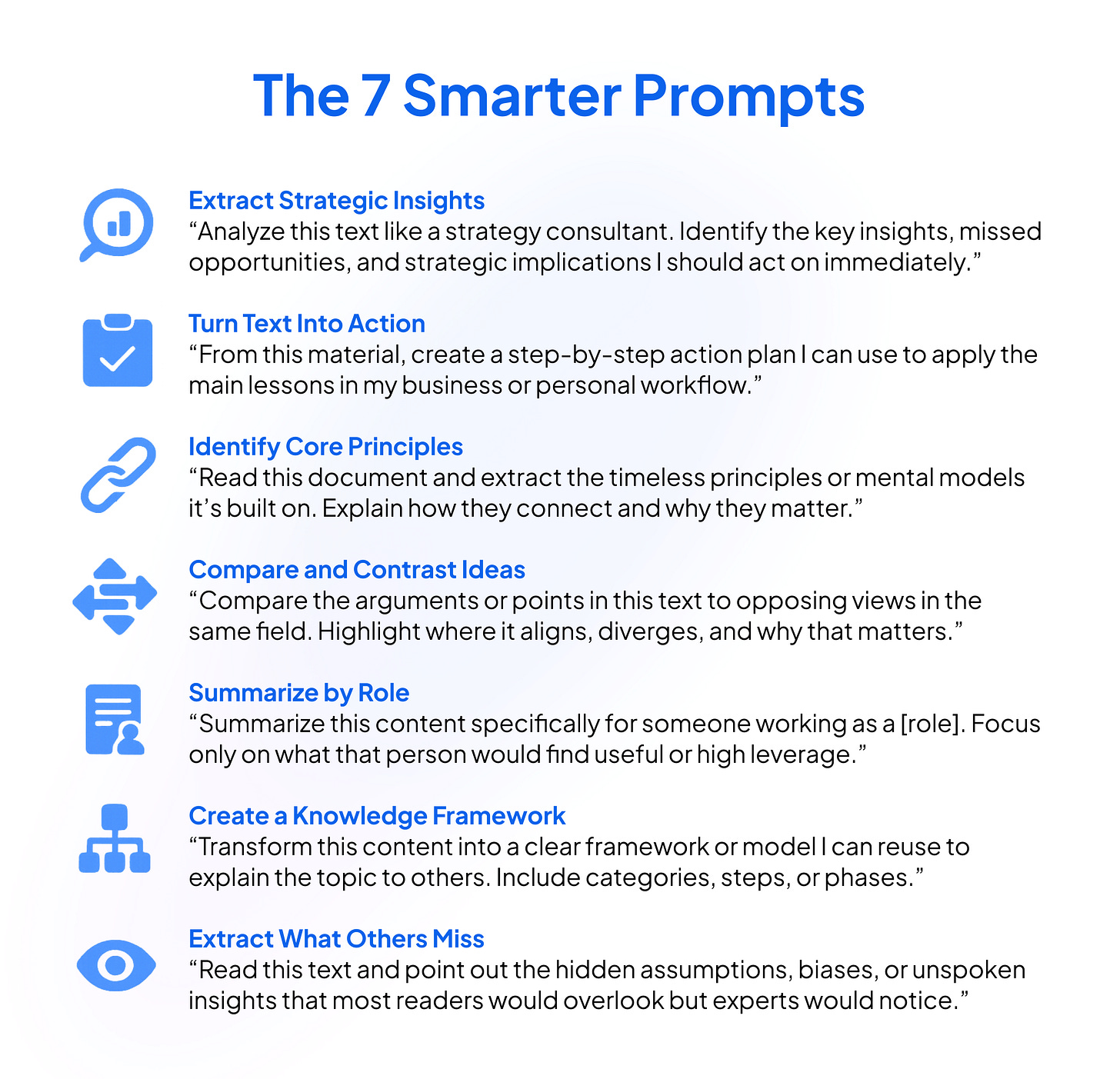

Seven Prompt Patterns that Scale Across Roles

These reusable prompts turn one-off experiments into teachable, auditable workflows. Save them in your prompt library, tag by use case, and version as they evolve.

Embedding Prompt Workflows Into the Organization

Once the skills are in place, the challenge shifts to integration. How do prompt workflows fit into the rhythm of daily operations? This is where many organizations stumble, not because they lack technology, but because they haven’t thought through the process.

A healthy system follows a clear cycle: ideation, selection, execution, evaluation, and versioning. Teams capture needs, pick the right template, run the workflow, review results, and feed insights back into the library. This creates a continuous loop, much like agile development, where prompts become living assets that improve over time rather than static instructions that quickly become outdated.
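The five-stage cycle can be sketched as a single Python function that runs one pass and feeds the review finding back into the library. This is a hedged illustration: `LibraryEntry`, `run_cycle`, and the stand-in `execute` and `review` functions are hypothetical. In practice, execution would call a model and evaluation would usually involve a human reviewer.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class LibraryEntry:
    """Hypothetical library record: current template plus its change history."""
    version: int
    text: str
    history: list[str] = field(default_factory=list)


def run_cycle(library: dict[str, LibraryEntry],
              template_name: str,
              need: str,
              execute: Callable[[str, str], str],
              review: Callable[[str], Optional[str]]) -> str:
    # 1. Ideation: a team has captured a concrete need (passed in as `need`).
    # 2. Selection: pick the approved template for this kind of need.
    entry = library[template_name]
    # 3. Execution: run the workflow with the current template version.
    output = execute(entry.text, need)
    # 4. Evaluation: review returns None if the output is acceptable,
    #    otherwise a short note on what needs to change.
    finding = review(output)
    # 5. Versioning: record the finding and publish an improved version,
    #    so the next run starts from a better prompt.
    if finding is not None:
        entry.history.append(f"v{entry.version}: {finding}")
        entry.version += 1
        entry.text += f"\nReviewer note: {finding}"
    return output


# Stand-ins for a model call and a reviewer, so the example runs offline.
def execute(template: str, need: str) -> str:
    return template.replace("{need}", need)


def review(output: str) -> Optional[str]:
    return None if "response time" in output else "state the expected response time"


library = {"customer-reply": LibraryEntry(1, "Draft a polite reply to: {need}")}
for _ in range(2):
    run_cycle(library, "customer-reply", "a delayed refund", execute, review)

print(library["customer-reply"].version, library["customer-reply"].history)
```

The first pass fails review and produces version 2; the second pass runs on the revised template and passes, so the history holds exactly one entry. That history is what makes the loop auditable.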

The supporting technology stack usually includes a prompt management platform, version control (for example, Git repositories hosted on GitHub or the Hugging Face Hub), and logging dashboards that track reuse, performance, and exceptions. But tools are secondary to behavior. Embedding these workflows means defining ownership, setting review cadences, and agreeing on what “good” looks like in your specific context.

And it’s urgent. A 2025 ITPro report found that enterprises face “critical shortages of staff with AI ethics and security expertise.” Without clear workflows and governance, even small prompt errors can escalate into compliance or reputational risks. What starts as an innocent productivity hack can become a data breach or bias incident if nobody’s paying attention to how prompts are being used at scale.

Metrics and KPIs That Matter

Measurement is how experimentation becomes discipline. Without the right metrics, you’re flying blind, unable to distinguish between what’s working and what’s wasting time. Track these key indicators:

Prompt reuse rate tells you how often a template gets reapplied across the organization. High reuse means you’ve created something genuinely valuable that people trust and return to. Low reuse might signal that prompts are too specific, too complex, or not solving real problems.

Error or exception rate shows how often outputs fail review or require manual correction. This is your quality signal: are your prompts producing reliable results, or are they creating more work than they save?

Business-user satisfaction captures whether the people using these tools feel they’re getting value. Technical success means nothing if end users find the process frustrating or the outputs unhelpful.

Time to value measures how quickly someone can go from having a need to getting a useful result. This speaks to the accessibility and maturity of your prompt systems.

Abandonment rate tracks prompts that fall out of use. This is just as important as adoption: it tells you what isn’t working and helps you prune your library to keep it focused and relevant.

Together, these indicators tell a story about whether your AI systems are learning with the organization or being forgotten as experiments. They show whether you’re building institutional knowledge or just accumulating technical debt.
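All five indicators can be computed from a simple usage log. The Python sketch below rests on assumptions that are not from the article and should be adapted locally: the hypothetical `Run` record and its fields, a 1 to 5 satisfaction scale, “reuse” counted as any run of a template that already appeared earlier in the log, median minutes as the time-to-value measure, and a template counting as abandoned when it has not been used in the last 30 days.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from statistics import mean, median


@dataclass(frozen=True)
class Run:
    """One hypothetical log entry: a single use of a library template."""
    template: str
    day: date
    failed_review: bool          # output rejected or manually corrected
    satisfaction: int            # user rating on an assumed 1-5 scale
    minutes_to_result: float     # from stating the need to a usable output


def prompt_kpis(runs: list[Run], library: set[str], today: date,
                stale_after_days: int = 30) -> dict[str, float]:
    if not runs:
        raise ValueError("need at least one run")
    # Reuse: a run counts as reuse if its template was used earlier in the log.
    seen: set[str] = set()
    reused = 0
    for run in sorted(runs, key=lambda r: r.day):
        if run.template in seen:
            reused += 1
        seen.add(run.template)
    # Abandonment: library templates with no run inside the recent window.
    cutoff = today - timedelta(days=stale_after_days)
    recently_used = {r.template for r in runs if r.day >= cutoff}
    return {
        "reuse_rate": reused / len(runs),
        "error_rate": sum(r.failed_review for r in runs) / len(runs),
        "avg_satisfaction": mean(r.satisfaction for r in runs),
        "median_minutes_to_value": median(r.minutes_to_result for r in runs),
        "abandonment_rate": len(library - recently_used) / len(library) if library else 0.0,
    }


library = {"meeting-summary", "contract-risk-scan", "press-release"}
runs = [
    Run("contract-risk-scan", date(2025, 5, 10), False, 4, 15.0),
    Run("meeting-summary", date(2025, 6, 2), False, 4, 6.0),
    Run("meeting-summary", date(2025, 6, 20), False, 5, 4.0),
    Run("meeting-summary", date(2025, 6, 25), True, 3, 12.0),
]
print(prompt_kpis(runs, library, today=date(2025, 6, 30)))
```

With this sample log, half the runs are reuse (0.5), one in four failed review (0.25), average satisfaction is 4, median time to value is 9 minutes, and two of the three templates count as abandoned. The numbers are invented purely to exercise the function; the point is that each KPI becomes a reproducible calculation rather than an impression.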

From Hacks to Capability

We’re entering a phase where AI maturity will no longer be judged by model count or chatbot presence, but by how well an organization manages its prompts, its invisible layer of language logic. This shift represents a fundamental change in how we think about AI implementation.

The companies that win won’t just write better prompts. They’ll engineer better systems: transparent, teachable, and continuously improving. They’ll build organizations where knowledge accumulates, where learning compounds, and where AI capabilities strengthen over time rather than fragmenting into isolated experiments.

Prompt engineering began as a creative skill. It’s becoming an enterprise discipline. The next step is governance, because when every employee can direct an AI system, responsibility scales with capability. The power that comes from democratizing AI access must be matched with the structure to use that power wisely.

How the AI Readiness Hub can support you in this journey

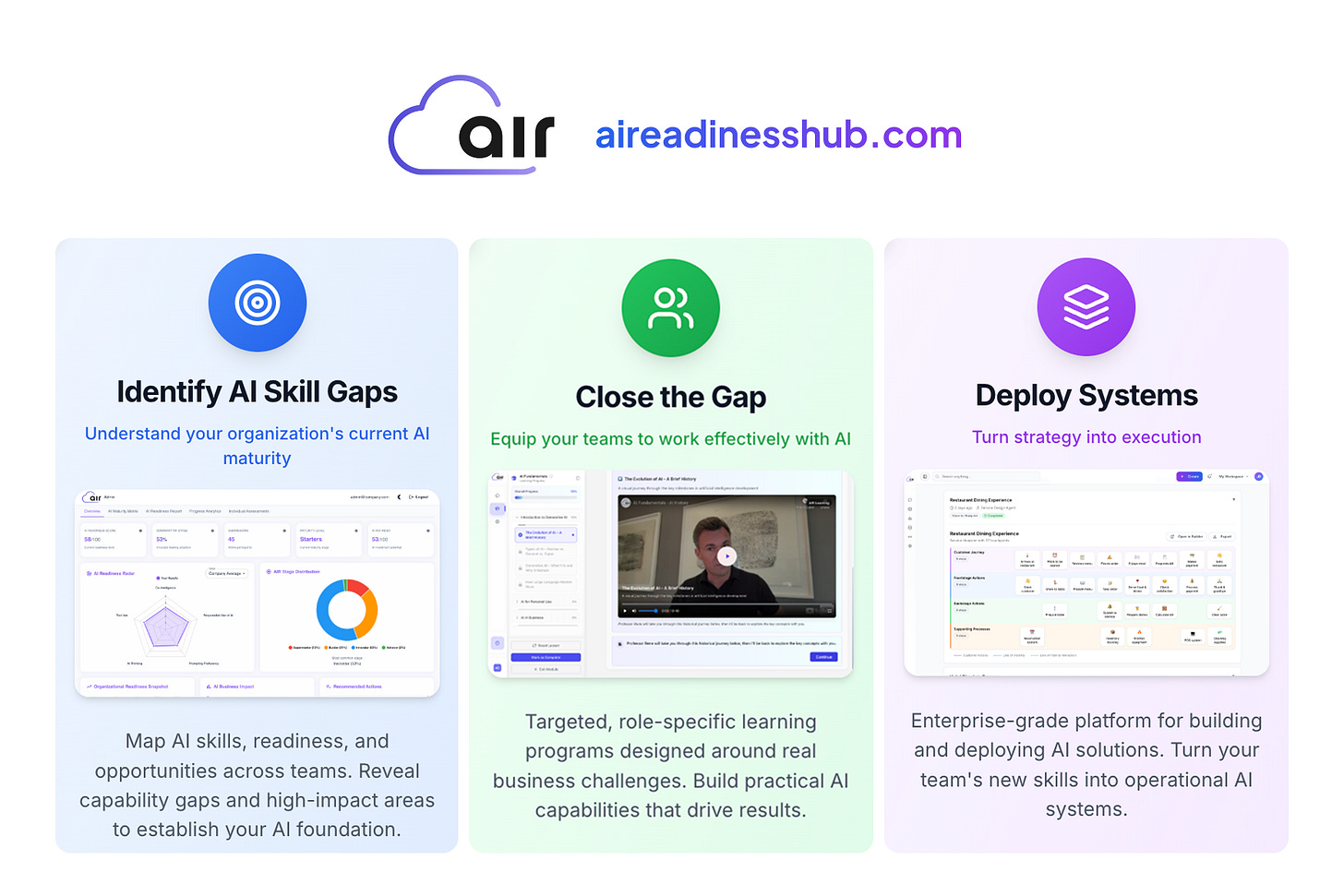

In the broader AI Capability Stack - spanning skills, systems, and culture - prompt engineering plays a foundational role. It’s the connective tissue between human intent and machine execution. When we help leaders see prompts not as hacks but as organizational capabilities, they begin to build systems that learn as fast as they do.

That’s exactly where the AI Readiness Hub comes in. AIR University is the home for developing those capabilities, from assessing your organization’s current AI maturity to upskilling teams in prompt literacy, AI strategy, and responsible governance. It provides the structured learning pathways that turn scattered individual knowledge into shared organizational competence.

And once those skills are in place, AIR Hosting provides the next layer: the operational environment to deploy, manage, and scale AI agents and workflows securely across the organization. It’s where the learning becomes action, where trained teams get the infrastructure to put their skills to work.

Together, these two layers - skills and systems - turn theory into practice. They transform one-off prompt experiments into repeatable, governed, organization-wide intelligence. Because in the end, the question isn’t whether your people can prompt. It’s whether your organization can learn from every prompt it writes.

Reach out to Alex if you’d like to know more about AI Readiness Hub: alex@cerenety.com
