EU AI Act for Startups – A Guide to Clear the Fog

TL;DR – What You Need to Know

Most of the Act’s obligations apply from August 2026 (the bans on prohibited practices have applied since February 2025), but waiting is risky. Use this time to prepare: compliance will soon be a prerequisite for market access.

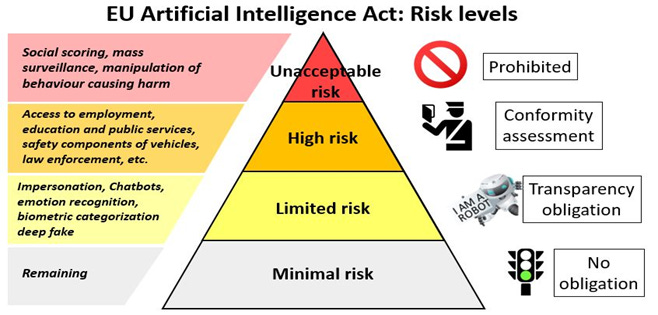

AI is classified into 4 risk levels: Minimal, Limited, High, and Unacceptable.

Most startups fall under Minimal or Limited Risk, but Limited Risk still comes with transparency obligations.

High-Risk systems (e.g. HR, health, finance) face strict obligations: CE marking, oversight, documentation.

Some systems, like social scoring or emotion detection in schools, are outright banned.

Explainability is not optional and should be part of product design from day one. Don’t build a black box.

Use our AI Compliance Canvas and role-mapping guide to get ahead: compliance will be a dealbreaker.

Recently, while training AI-focused climate-tech startups from Climate KIC in the UK and Ireland, I was struck by one thing: how profoundly unclear the EU AI Act still is to the entrepreneurs who need clarity the most. These are not casual users of technology; they’re innovators racing to mitigate climate change, entrepreneurs whose ideas promise tangible benefits for the planet. Yet confusion around compliance threatens to stall their progress.

One founder summed it up bluntly: “How can we innovate when we don’t even know if our innovation will be allowed?” This newsletter is my response: clear, practical, and focused on removing the fog that surrounds Europe's landmark regulation. If your startup leverages AI (I hope it does) and you interact with the EU market, this is your guide.

🛡️ The Brussels Firewall

The AI Act is already shaping the market reality across sectors. It doesn't matter whether your developers are based in Berlin, Glasgow, or Silicon Valley. If your AI touches Europe, you must comply.

Selling predictive analytics software to a Dutch municipality? Offering an AI-driven SaaS platform accessible in France? Guiding energy consumption choices for Portuguese users? In each case, you’re under EU jurisdiction. Forget geographic boundaries: in AI regulation, legal obligations travel with your product.

🧭 How We Got Here: A Quick History of the EU AI Act

When Brussels watched AI leap from research labs into everyday life around 2018, alarm bells rang. With scandals like Cambridge Analytica fresh in mind, the EU knew it needed guardrails. Fast forward to April 2021: the European Commission proposed the AI Act, Europe’s bid to balance innovation with fundamental rights. After heated debates, lobbying battles, and countless revisions, the Act passed in early 2024.

Key Milestones:

April 2021: Initial AI Act proposal

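August 2024: Official entry into force

February 2025: Bans on unacceptable-risk practices apply

August 2025: Obligations for general-purpose AI models apply

August 2026: Most remaining obligations apply (rules for high-risk AI embedded in regulated products follow in August 2027)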

With the AI Act, Europe tied regulation directly to its vision of responsible innovation. Now, let's decode it clearly for your startup.

🎨 Navigating the Risk Spectrum

The EU AI Act doesn’t regulate all AI the same way. Instead, it slices the landscape into four distinct risk levels, each with its own obligations, from light-touch transparency to outright bans. This risk-based approach is what makes the law both nuanced and powerful. It’s not about red tape; it’s about proportionality. The greater the impact on people’s lives, the tighter the rules.

So where does your startup’s AI fall? Let’s walk through the spectrum, from safe harbor (minimal risk) to forbidden zone (unacceptable risk).

✅ Minimal Risk: Safe Zone

This is the safest zone on the regulatory spectrum. If your AI tool helps users write better emails, clean up dashboards, or optimize internal workflows, congratulations: you’re operating in minimal-risk territory.

Examples include:

Productivity tools like grammar checkers or summarizers.

AI-enhanced features such as noise reduction in video calls.

Search and navigation tools guiding logistics or user journeys.

Basic analytics dashboards for internal reporting.

Automation that improves backend workflows.

Even smart toys or gadgets, as long as they don’t cross into biometric or behavioral profiling territory.

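📝 What do you have to do? Almost nothing. Apart from the general AI-literacy duty in Article 4, which applies to providers and deployers of all AI systems, there are no registration requirements, no documentation mandates, no transparency labels, no risk assessments, and no audits under the AI Act.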

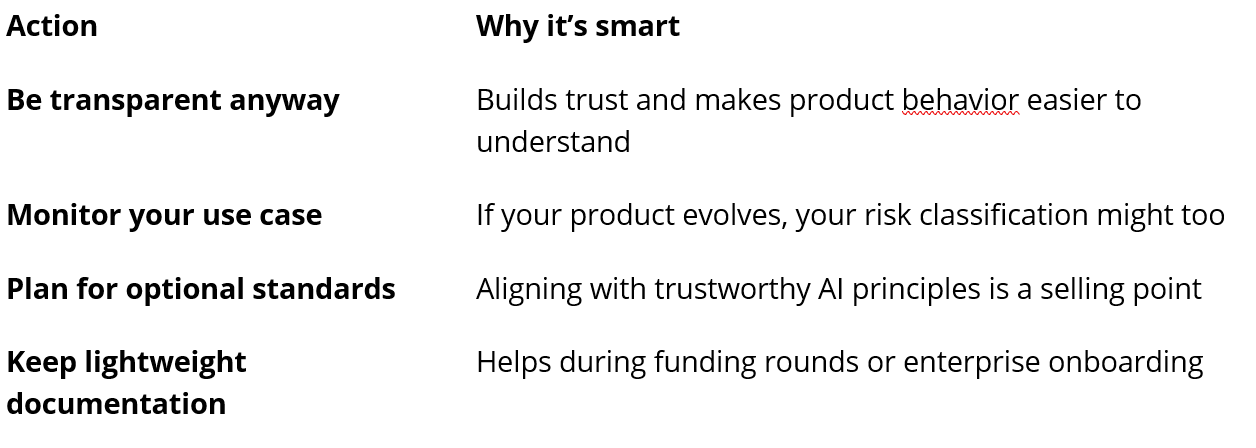

But that doesn’t mean you should ignore best practices.

💡 Strategic advice for startups:

Next: things get a little more serious. Welcome to Limited Risk.

ℹ️ Limited Risk: Transparency is Non-Negotiable

Limited-risk systems are the everyday workhorses of the AI world: chatbots, product recommenders, media suggestion engines, and content generators. They don’t decide who gets a loan or diagnose a disease, but they still influence behavior, and that earns them a spotlight under the EU AI Act.

If your AI tool interacts with users or produces synthetic content, this section applies to you.

📝 What do you have to do? The EU AI Act’s Article 50 (Article 52 in the Commission’s original proposal) outlines three core transparency requirements:

🧠 Strategic advice for startups:

Don’t hide the AI. Transparency is not just compliance, it’s branding. It says: “We have nothing to hide.”

Build in flexibility. Design toggles and disclosures into your UI from the start; it’s cheaper than retrofitting.

Avoid feature creep. Adding biometric or emotion detection could push you into high-risk, or worse, banned, territory.
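To make “build disclosures in from the start” concrete, here is a minimal Python sketch, assuming a product with both a chat interface and generated content. `DisclosureConfig`, `open_chat`, and `label_generated_content` are hypothetical names, and the `ai_generated` metadata flag is a placeholder rather than a recognized marking standard. The idea is simply that every AI output passes through one place where the notice and label are applied.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DisclosureConfig:
    """Hypothetical central settings for AI disclosures (wording, locale)."""
    chat_notice: str = "You're chatting with an AI assistant."
    content_label: str = "AI-generated"


def open_chat(cfg: DisclosureConfig) -> list[str]:
    """Start every conversation with the AI notice as the first message."""
    return [cfg.chat_notice]


def label_generated_content(text: str, cfg: DisclosureConfig) -> dict:
    """Return display text with a visible label plus a machine-readable marker.

    The plain `ai_generated` flag is a placeholder; a production system would
    rely on an established marking technique (e.g. embedded metadata or watermarks).
    """
    return {
        "display_text": f"[{cfg.content_label}] {text}",
        "metadata": {"ai_generated": True, "label": cfg.content_label},
    }


cfg = DisclosureConfig()
print(open_chat(cfg)[0])
print(label_generated_content("Try a 20-minute recovery walk.", cfg)["display_text"])
```

Keeping the wording in one config object means a legal or localization change touches a single place instead of every screen.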

Now let’s step into the big leagues: high-risk AI.

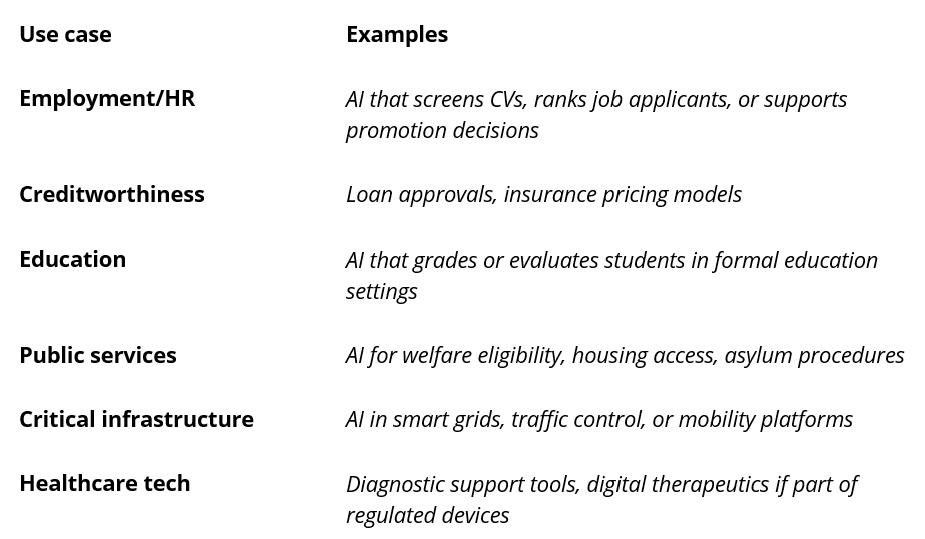

⚠️ High Risk: Serious Responsibility

AI systems here shape crucial life decisions, like educational outcomes, healthcare recommendations, financial decisions, and job opportunities. Missteps can significantly harm lives.

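If your AI system’s use case is listed in Annex III of the EU AI Act (e.g., education, employment, credit scoring) or it is a safety component of (or is itself) a product regulated under the EU laws listed in Annex I (e.g., medical devices, machinery, vehicles), you’re in high-risk territory.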

📝 What do you have to do? This is the compliance heavy-lifting zone. Here’s what’s expected, and how to approach it smartly. One requirement sets this tier apart: the conformity assessment, which must be completed before the system is placed on the EU market.

🧠 Strategic advice for startups:

Compliance can be a competitive edge: Being AI Act–ready builds trust with EU clients, especially enterprise buyers or in regulated sectors.

Don’t wait for the deadline: Some requirements (like bias testing and human oversight) take time to build properly, so start now.

Build lean: You can meet many of the requirements with established standards and documentation practices (like ISO/IEC 42001, model cards, and datasheets for datasets).

Map roles carefully: If you’re a startup building the model, you are the provider. Your EU customer is the deployer. Some duties are shared (e.g., post-market monitoring), so contracts should allocate them clearly.

Last stop: the red zone. Let’s talk about the AI systems you can’t build.

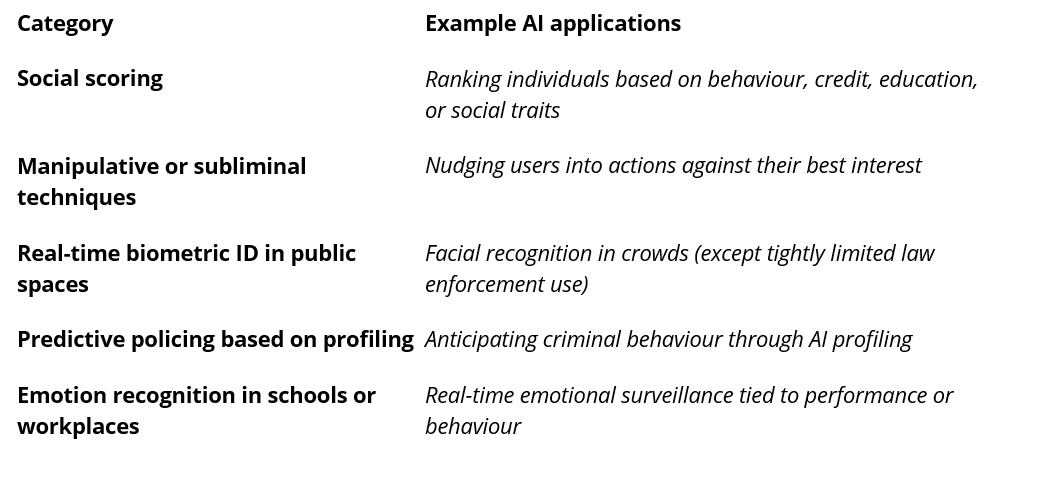

❌ Unacceptable Risk: Forbidden Zones

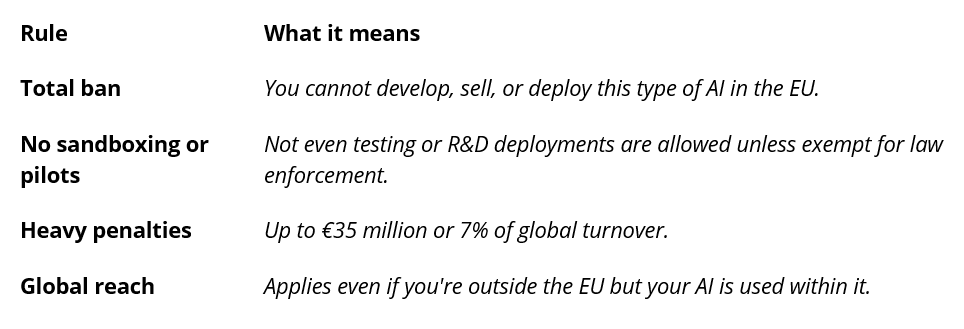

The EU AI Act draws a hard line in the sand. Certain AI systems are banned entirely. Not regulated. Not sandboxed. Banned.

If your product involves manipulation, surveillance, or profiling that undermines fundamental rights, you’re not facing regulation, you’re facing prohibition.

📌 Examples of banned systems:

🛑 What does this mean?

🧠 Strategic advice for startups:

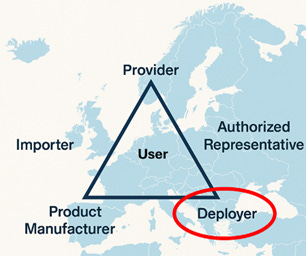

🧮 From Checklist to Compass: How to Classify Your AI System

By now, you’ve seen the four risk levels: Minimal, Limited, High, and Unacceptable. But how do you know which one your AI system falls into?

The EU AI Act offers a structured lens, and we've simplified it into a decision matrix and positioning map.

Here’s how to navigate it:

Now, match your AI system across the rows. The rightmost column that applies determines your risk category. Still unsure? Then default to the stricter category and work backward. It's cheaper to build with compliance than to retrofit it.
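The matching rule above (the strictest applicable column wins, and doubt pushes you toward the stricter category) can be sketched in a few lines of Python. The trait names and their tier assignments are hypothetical simplifications for self-assessment, not the legal test in the Act.

```python
from enum import IntEnum


class RiskTier(IntEnum):
    """EU AI Act risk tiers, ordered from least to most strict."""
    MINIMAL = 0
    LIMITED = 1
    HIGH = 2
    UNACCEPTABLE = 3


# Hypothetical, heavily simplified trait list for self-assessment only.
# It is NOT the legal test: real classification needs Article 5, Article 50,
# and Annexes I and III of the Act, ideally with legal review.
TRAIT_TIERS = {
    "internal_workflow_automation": RiskTier.MINIMAL,
    "interacts_with_users": RiskTier.LIMITED,
    "generates_synthetic_content": RiskTier.LIMITED,
    "annex_iii_use_case": RiskTier.HIGH,  # e.g. hiring, credit scoring, education
    "safety_component_of_regulated_product": RiskTier.HIGH,
    "social_scoring": RiskTier.UNACCEPTABLE,
    "emotion_recognition_at_work_or_school": RiskTier.UNACCEPTABLE,
}


def classify(confirmed, unsure=()):
    """Return the strictest tier triggered by any trait.

    Traits in `unsure` are treated as if they apply, which implements
    "default to the stricter category and work backward".
    """
    tier = RiskTier.MINIMAL
    for trait in set(confirmed) | set(unsure):
        if trait not in TRAIT_TIERS:
            raise ValueError(f"Unmapped trait {trait!r}: review it manually")
        tier = max(tier, TRAIT_TIERS[trait])
    return tier


print(classify({"interacts_with_users"}).name)  # LIMITED
print(classify({"interacts_with_users"}, unsure={"annex_iii_use_case"}).name)  # HIGH
```

Taking the maximum mirrors the “rightmost column” rule. Obligations can also stack in practice: a high-risk system that chats with users still carries the transparency duties, so treat the result as the headline tier rather than a complete list of duties.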

To help visualize this, imagine a 2x2 map:

🏋️‍♀️ Case Study: When AI Tells You to Rest

Imagine you’re building an AI-powered fitness coach. Your app uses wearable data (heart rate, sleep, and recovery) to recommend workouts. One morning, the user opens the app, and it says: “Delay your next intense workout.”

Helpful? Yes. Trusted? Not necessarily.

This is where explainability becomes critical, even for a limited-risk AI system.

🚧 Risks

Even for a limited-risk AI system, pitfalls can emerge quickly. If users don’t understand why they’re being told to skip a workout, they’re likely to dismiss the recommendation. Over time, this erodes trust and user retention. Worse, it invites regulatory scrutiny. Authorities may ask: How is the system personalizing its advice? Is the process fair, accountable, and safe?

🛠 Solutions

To avoid these traps, startups need to make their AI’s logic transparent. Attribution tools like SHAP or LIME can help unpack the decision process by estimating how much each input contributed to a recommendation. For example, the system might reveal: “Today’s recommendation is driven by below-baseline heart rate variability (50%), poor sleep quality (30%), and missed recovery goals (20%).”

But even more important is how that information is delivered. Translate the data into user-friendly language: “We noticed poor sleep quality over the last three nights. To prevent overtraining, we suggest a lighter session today.”
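Here is a minimal sketch of how a 50/30/20-style breakdown can be derived from per-feature attributions and turned into a user-facing message. It assumes the attributions have already been computed (for example with SHAP); the values, feature names, and plain-language phrases below are hypothetical and hard-coded so the example runs without any library. Raw SHAP values are signed and expressed in the model’s output units, so turning them into percentages is a presentation choice, not something the tools report directly.

```python
# Hypothetical attributions for one "rest today" recommendation.
# In practice these might come from SHAP or LIME; here they are hard-coded.
ATTRIBUTIONS = {
    "hrv_below_baseline": 0.5,
    "poor_sleep_quality": 0.3,
    "missed_recovery_goals": 0.2,
}

# Hypothetical plain-language phrasing for each feature.
PLAIN_LANGUAGE = {
    "hrv_below_baseline": "your heart rate variability has been below your usual baseline",
    "poor_sleep_quality": "we noticed poor sleep quality over the last three nights",
    "missed_recovery_goals": "you missed some of this week's recovery goals",
}


def attribution_shares(attributions):
    """Convert signed per-feature attributions into percentage shares.

    Shares use absolute values, so they describe how strongly each feature
    influenced the recommendation, not in which direction.
    """
    total = sum(abs(v) for v in attributions.values())
    if total == 0:
        return {name: 0.0 for name in attributions}
    return {name: 100 * abs(v) / total for name, v in attributions.items()}


def explain(attributions, suggestion):
    """Lead with the strongest driver in plain language, then show the breakdown."""
    shares = attribution_shares(attributions)
    top = max(shares, key=shares.get)
    reason = PLAIN_LANGUAGE.get(top, top.replace("_", " "))
    breakdown = ", ".join(
        f"{name.replace('_', ' ')} {share:.0f}%"
        for name, share in sorted(shares.items(), key=lambda item: -item[1])
    )
    return f"{reason[0].upper()}{reason[1:]}. {suggestion} (Drivers: {breakdown})"


print(explain(ATTRIBUTIONS, "To prevent overtraining, we suggest a lighter session today."))
```

The message leads with the single strongest driver and keeps the numeric breakdown as a secondary detail, which follows the advice above: data for those who want it, plain language for everyone.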

Finally, build in a human-in-the-loop. For instance, a certified fitness coach could review the weekly output of the AI system to ensure that it aligns with best practices and personal safety.

📁 Documenting Best Practices:

Good documentation isn’t just for auditors; it’s for your future self, your investors, your compliance team, and ultimately, your users. The more consequential your AI becomes, the more critical it is to track what it does, how it decides, and how those decisions evolve.

Even for a limited-risk system, building lightweight but disciplined documentation habits sets your startup apart. Here’s what that looks like in practice:

Model Card (1-page summary):

• Inputs (e.g., heart rate, recovery score), prediction goal, and system limitations

• Explanation method used (e.g., SHAP)

• Frequency of model reviews by experts

Decision Log:

• Record for each recommendation: inputs, rationale, timestamp, and user action

Internal Risk Register:

• Risks: misleading health advice, over-reliance, liability

• Mitigations: clear explainability layer, human-in-the-loop, opt-out options

• Owner: e.g., Head of Product or Compliance Officer
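The three artifacts above map naturally onto simple records. The sketch below is one possible shape, assuming Python dataclasses and an append-only JSON Lines file for the decision log; the class names, field names, and example values are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ModelCard:
    """One-page summary: inputs, goal, limitations, explanation, reviews."""
    inputs: list[str]
    prediction_goal: str
    limitations: list[str]
    explanation_method: str
    expert_review_frequency: str


@dataclass
class DecisionLogEntry:
    """One decision-log row: inputs, rationale, timestamp, and user action."""
    inputs: dict
    recommendation: str
    rationale: str
    user_action: Optional[str] = None  # e.g. "accepted" or "dismissed", set later
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


@dataclass
class RiskRegisterEntry:
    """One internal risk-register row with a named owner."""
    risk: str
    mitigations: list[str]
    owner: str


def to_jsonl_line(record) -> str:
    """Serialize one of the dataclass records above as a JSON Lines row."""
    return json.dumps(asdict(record), ensure_ascii=False) + "\n"


def append_to_log(path: str, record) -> None:
    """Append a record to an append-only JSON Lines file."""
    with open(path, "a", encoding="utf-8") as log_file:
        log_file.write(to_jsonl_line(record))


# Hypothetical entries for the fitness-coach example.
card = ModelCard(
    inputs=["heart_rate", "recovery_score", "sleep_quality"],
    prediction_goal="Recommend the intensity of the next workout",
    limitations=["Not medical advice", "Less reliable with sparse wearable data"],
    explanation_method="SHAP",
    expert_review_frequency="Weekly, by a certified fitness coach",
)
risk = RiskRegisterEntry(
    risk="Misleading health advice",
    mitigations=["Clear explainability layer", "Human-in-the-loop", "Opt-out options"],
    owner="Head of Product",
)
entry = DecisionLogEntry(
    inputs={"sleep_quality": "poor", "recovery_score": 41},
    recommendation="Lighter session today",
    rationale="Poor sleep quality over the last three nights",
)
print(to_jsonl_line(entry), end="")
```

An append-only log keeps each recommendation traceable without a database, and the same record shape can be moved into one later.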

So, whether your AI recommends kettlebells or career paths, make sure your user knows why.

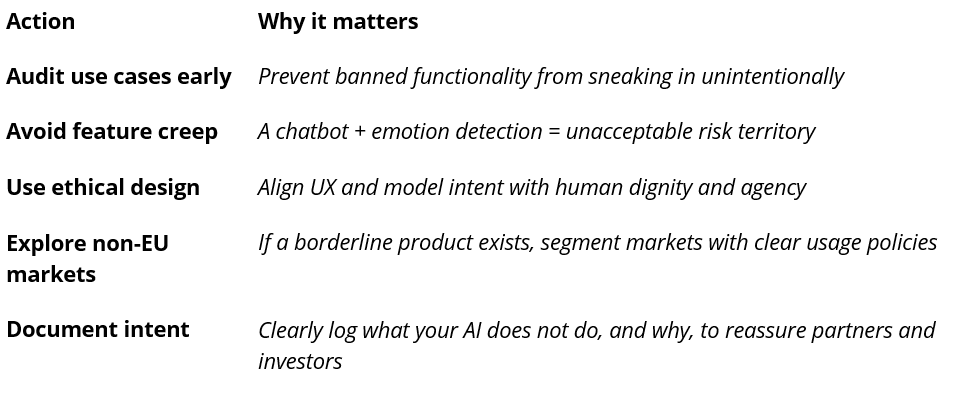

🧭 Know Your Role: Who Does What Under the EU AI Act

The EU AI Act doesn’t just classify systems by risk; it also defines who’s responsible for what. And if you're a startup, you might be wearing more than one hat.

Let’s simplify the triangle:

Providers build the AI system or model.

Product Manufacturers embed that system into a device or branded product.

Deployers use the AI in a real-world context.

End Users interact with the AI output but carry no obligations under the Act.

Authorized Representatives & Importers ensure obligations are fulfilled when companies operate from outside the EU.
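As a rough self-check, the role mapping can be expressed as a small function. This is a simplified, hypothetical reading: under the Act, the provider role hinges on developing a system and placing it on the market under your own name, and the EU authorized-representative duty applies to providers established outside the EU (for high-risk systems and general-purpose AI models). `Activities` and `map_roles` are illustrative names, and importers and distributors are left out for brevity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Activities:
    """What a company does with an AI system (hypothetical self-assessment inputs)."""
    builds_system: bool          # develops it and offers it under its own name
    embeds_in_own_product: bool  # ships it inside its own device or branded product
    operates_it: bool            # uses it in a real-world, professional context
    established_outside_eu: bool = False
    high_risk: bool = False


def map_roles(a: Activities) -> tuple[list[str], list[str]]:
    """Return (roles, to_dos) under a simplified reading of the Act's roles."""
    roles = set()
    to_dos = []
    if a.builds_system:
        roles.add("provider")
    if a.embeds_in_own_product:
        roles.add("product manufacturer")
    if a.operates_it:
        roles.add("deployer")
    # Simplified: non-EU providers of high-risk systems need an EU-based
    # authorized representative before placing the system on the EU market.
    if a.builds_system and a.established_outside_eu and a.high_risk:
        to_dos.append("appoint an EU authorized representative")
    return sorted(roles), to_dos


# A founder who builds, brands, and operates their own app:
roles, to_dos = map_roles(Activities(True, True, True))
print(roles)  # ['deployer', 'product manufacturer', 'provider']
```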

Let’s break it down using the AI-powered fitness coach app we introduced earlier:

If you’re building, branding, and operating your own app, congratulations: you’re the provider, manufacturer, and deployer. That means you shoulder the bulk of compliance responsibilities across the lifecycle. With that clarified, let’s take a step back and see how Europe’s approach stacks up globally.

🌍 Global AI Regulation Snapshot

AI is global, but the rules aren’t. While Europe builds a regulatory framework rooted in human rights, other regions are taking markedly different paths. Here’s a look at how the three major powers are shaping AI governance:

United States: Innovation First, Rules Later

The U.S. takes a decentralized, sector-specific approach. There’s no overarching federal AI law. Instead, the White House has leaned on executive orders and voluntary commitments. Regulators like the FTC and FDA weigh in based on context. State laws (like California’s) are filling the void. AI development remains fast and founder-driven, but the lack of consistent rules raises risks around data use, bias, and safety.

China: Centralized and Strategic

China moves fast, and with top-down coordination. Its AI governance began with broad ethical guidelines, but it has since evolved into binding rules. The government requires security reviews, algorithmic registration, and pre-approval for certain applications, especially those involving public opinion or youth. Regulation here is not only about safety, it’s about political stability and competitive advantage.

Europe: Risk-Based and Rights-Driven

Europe leads in normative frameworks. The EU AI Act is a single regulation with extraterritorial scope and layered obligations based on risk. It's enforceable, harmonized, and deeply influenced by GDPR-style thinking: transparency, accountability, and fairness come first. Startups may grumble, but Europe’s clarity is fast becoming the global benchmark.

“Europe doesn’t just regulate AI, it defines what trustworthy AI looks like.”

If your startup wants to scale across markets, understanding these divergent regulatory logics can give you a strategic edge. Now let’s talk about what this means for collaboration, especially between startups and corporates. Because here’s the kicker: regulation doesn’t just shape markets; it reshapes partnerships.

🤝 Why Corporates Fear Startups (And How to Fix It)

Corporates love the idea of working with startups. Fast innovation, fresh thinking, niche expertise. But when it comes to AI, especially in the EU AI Act context, excitement often turns to hesitation. Why? Because regulation raises the stakes, and startups often don’t look ready to carry the weight. Here’s what’s really holding corporates back (based on a conversation I recently had with corporate leaders):

1. Regulatory Risk Transfer

Under the EU AI Act, deploying a high-risk AI system, no matter who built it, means shared legal responsibility. If a startup doesn’t comply, the corporate partner can still get fined. Heavily.

2. Immaturity of Internal Processes

Startups move fast, but often at the expense of documentation, testing protocols, or incident response systems. That makes due diligence hard and integration risky.

3. Security and IP Concerns

Unsecured APIs, open-source dependencies, and unclear IP rights can scare corporates off, especially when AI models are trained on third-party or proprietary data.

4. Procurement Friction

Startups often can’t meet corporates’ heavyweight procurement requirements: ISO certifications, insurance, ESG scores, and more. By the time contracts are ready, the startup may have pivoted.

5. Reputational Risk

If a biased AI tool hits the news, it’s not the unknown startup that gets dragged, it’s the corporate brand that bought it.

💡 The Fix: Build Trust Into the Stack

If you’re a startup, you can close the credibility gap by:

Documenting your development process (training data, model versions, risk mitigation)

Aligning with GDPR and AI Act requirements proactively (see the guidance above)

Creating a sandbox or pilot that includes a transparency and oversight layer

Being upfront about limitations and roadmap fixes

Signing shared accountability agreements that clarify roles and responsibilities

If you’re a corporate, you can help by:

Creating lightweight onboarding tracks for startups

Providing AI compliance toolkits instead of expecting ISO maturity on day one

Appointing internal champions to de-risk and steward the partnership

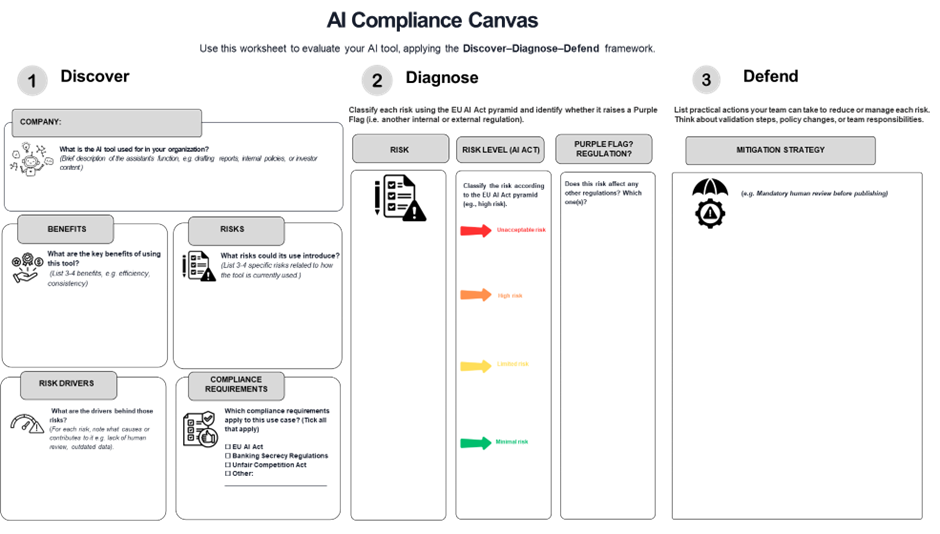

🧰 The AI Compliance Canvas: One Page to Rule Your Risk

To wrap it all up, here’s a hands-on tool to help you operationalize everything you’ve just read. The AI Compliance Canvas is a simple, strategic worksheet designed for busy founders, product teams, and legal leads.

It follows the Discover – Diagnose – Defend framework and lets you evaluate any AI system in three clear steps:

🕵️‍♀️ Step 1: Discover

Clarify what your AI tool does, why it exists, and what benefits it brings. Write out the risks it could introduce and what drives those risks (e.g., outdated training data, lack of human oversight). Then list which regulations apply to your use case: AI Act? GDPR? Banking rules?

🩺 Step 2: Diagnose

Classify each risk using the EU AI Act’s pyramid (Minimal, Limited, High, Unacceptable). Flag any risks that also raise issues under other regulations; those are your “Purple Flags.” This helps you see overlaps between AI, data protection, financial law, and more.

🛡 Step 3: Defend

List practical mitigation strategies. Do you need a human-in-the-loop? A new documentation protocol? More training for your team? This is where compliance moves out of the legal silo and into the design process.
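For teams that prefer a machine-checkable version, the canvas can be modeled as a small data structure. This Python sketch is an assumption about how one might encode it, not the official template: each risk records its driver (Discover), its AI Act tier (Diagnose), any other regulations it touches, which marks it as a Purple Flag, and its mitigations (Defend). The fitness-coach entries are illustrative.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Tier(IntEnum):
    MINIMAL = 0
    LIMITED = 1
    HIGH = 2
    UNACCEPTABLE = 3


@dataclass
class Risk:
    description: str
    driver: str                                                  # Discover
    tier: Tier                                                   # Diagnose
    other_regulations: list[str] = field(default_factory=list)  # e.g. ["GDPR"]
    mitigations: list[str] = field(default_factory=list)        # Defend

    @property
    def purple_flag(self) -> bool:
        """A risk that also raises issues under another regulation."""
        return bool(self.other_regulations)


@dataclass
class ComplianceCanvas:
    purpose: str
    benefits: list[str]
    applicable_regulations: list[str]
    risks: list[Risk] = field(default_factory=list)

    def purple_flags(self) -> list[Risk]:
        return [r for r in self.risks if r.purple_flag]

    def undefended(self) -> list[Risk]:
        """Diagnosed risks that still have no mitigation listed."""
        return [r for r in self.risks if not r.mitigations]

    def blocked(self) -> bool:
        """Any unacceptable-risk item means the use case cannot ship as designed."""
        return any(r.tier == Tier.UNACCEPTABLE for r in self.risks)


canvas = ComplianceCanvas(
    purpose="AI fitness coach that recommends workout intensity",
    benefits=["Personalized training plans", "Fewer overtraining setbacks"],
    applicable_regulations=["EU AI Act", "GDPR"],
    risks=[
        Risk("Misleading health advice", "Sparse or noisy wearable data", Tier.LIMITED,
             mitigations=["Explainability layer", "Weekly coach review"]),
        Risk("Processing health data", "Heart rate and sleep inputs", Tier.LIMITED,
             other_regulations=["GDPR"]),
    ],
)
print([r.description for r in canvas.purple_flags()])  # ['Processing health data']
print([r.description for r in canvas.undefended()])    # ['Processing health data']
```

`undefended()` gives a quick checklist of risks still missing a Defend step, which is a natural agenda for the next product review.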

How you can use this canvas:

As a team exercise during product reviews

In board meetings when aligning risk with roadmap

During investor due diligence to demonstrate readiness

“If your product team can’t fill out this canvas, you’re not ready to ship to Europe.”

Want the editable version? Send me a DM and I’ll send you the template.

And with that, you’ve got the map, the gear, and the guide. Now go build boldly and responsibly.

Acknowledgement: A big thanks to Mickie De Wet for the sharp feedback on both content and tone - and for co-developing the Discover–Diagnose–Defend framework and the AI Compliance Canvas with me. It’s been a real pleasure shaping this together. Your clarity and critical eye made this better!

Update: Possible Delay Ahead. The European Commission is reportedly considering delaying the AI Act’s application, mainly due to tensions around the code of practice for general-purpose AI and delayed technical standards. But don’t slow down. This newsletter still matters: use the extra time to clarify your risk level, document your AI systems, and build transparency into your product. See Marie Potel-Saville’s post on this important development.

Originally published on May 30, 2025 (content not updated).