Context Engineering: The Next Frontier of AI Agents

Why designing what your AI sees matters more than clever prompts

TL;DR

More context isn’t always better: excessive input strains the model’s attention budget and causes context rot, reducing accuracy by up to 30%.

Effective context engineering principles include: modular prompts, clear tool contracts, dynamic retrieval, memory layering, and sub-agent orchestration.

Case study: Context engineering improved legal AI assistant accuracy by 27% while cutting costs by 40%.

For leaders, this isn’t just technical; it’s about risk management, cost efficiency, and capability scaling.

Bigger context windows make engineering more important, not less. Selective filtering and standard protocols will define the future.

Strategic question for leaders: “What should my AI actually see and what must I keep out of view?”

From Prompts to Context: A Paradigm Shift

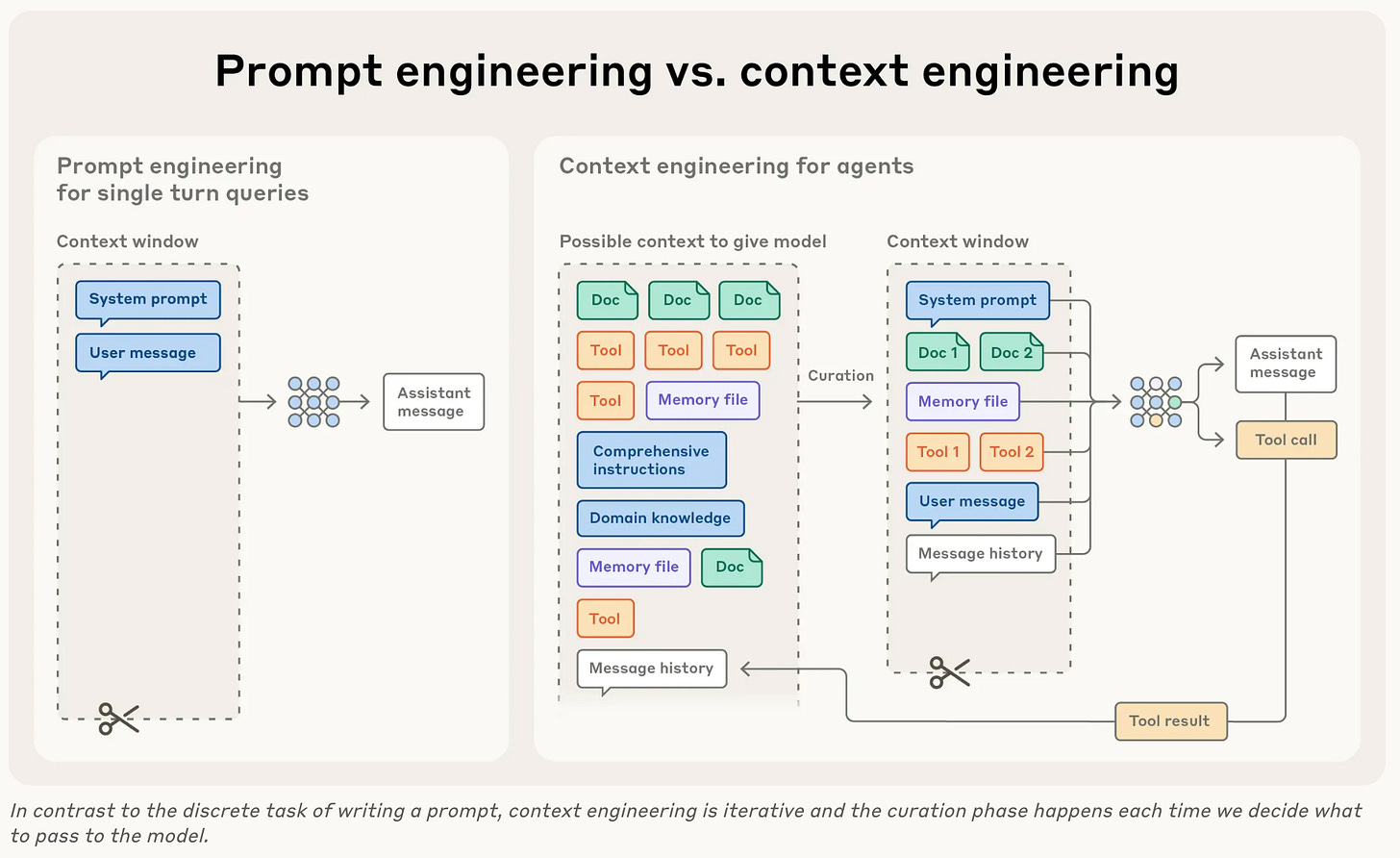

For two years, much of the AI world has obsessed over prompt engineering. Crafting the “perfect” instruction became an industry in itself. But as large language models (LLMs) power more sophisticated agents - copilots, research assistants, autonomous planners - it’s becoming clear: the real challenge isn’t the prompt. It’s the context.

Context is everything the model “sees”:

System prompt (role and constraints)

Conversation history

Retrieved documents

Tool specifications

Examples and instructions

Anthropic calls this effective context engineering. Their research* shows it will define the next generation of reliable AI agents.

Why More Context Can Make AI Worse

It feels intuitive: if models thrive on information, why not give them everything? All the documents, all the history, all the instructions.

But Anthropic highlights why this fails:

Attention Budget: LLMs don’t weigh tokens equally. Like humans with limited working memory, they prioritize recent or salient information. Important details get diluted in long inputs.

Context Rot: In very long contexts, accuracy degrades. Models hallucinate, contradict themselves, and miss key instructions.

When Anthropic supplied excessive history without pruning, model accuracy on retrieval tasks dropped by 30% compared to a context-engineered baseline.

Think of reading 100 pages of meeting notes before making a decision. The more you keep in mind, the more likely you are to miss the one line that actually matters.

Key takeaway: More isn’t better. Smarter context curation is.
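One simple form of curation is pruning conversation history to a fixed token budget. The sketch below is a minimal illustration, not Anthropic's method: the `Message` type, the `prune_history` function, and the rough "4 characters per token" estimate are all assumptions made for this example, and a real system would use the model's own tokenizer.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str  # "system", "user", or "assistant"
    text: str


def estimate_tokens(text: str) -> int:
    # Crude assumption: roughly 4 characters per token.
    # Replace with a real tokenizer in practice.
    return max(1, len(text) // 4)


def prune_history(messages: list[Message], budget: int) -> list[Message]:
    """Keep system messages plus the most recent turns that fit the budget."""
    system = [m for m in messages if m.role == "system"]
    turns = [m for m in messages if m.role != "system"]
    remaining = budget - sum(estimate_tokens(m.text) for m in system)

    kept: list[Message] = []
    # Walk backwards so the newest turns are kept first.
    for message in reversed(turns):
        cost = estimate_tokens(message.text)
        if cost > remaining:
            break
        kept.append(message)
        remaining -= cost

    # Restore chronological order before returning.
    return system + list(reversed(kept))
```

Dropping the oldest turns is the bluntest option. Summarizing them instead of discarding them keeps more continuity at a similar token cost.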

Principles of Context Engineering

Anthropic outlines practical methods that anyone deploying AI agents should adopt:

Modularize the Prompt

Break the agent’s context into distinct sections. This avoids overlap and makes updates easier.

Background: role and rules of the agent

Tools: APIs and instructions for their use

Examples: demonstrations of correct outputs

Constraints: formatting, tone, or compliance rules
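The four sections above can be kept as separate pieces and assembled in a fixed order. This is a minimal sketch under stated assumptions: the function name `build_system_prompt` and the XML-style section tags are illustrative choices for this example, not a required format.

```python
SECTION_ORDER = ("background", "tools", "examples", "constraints")


def build_system_prompt(sections: dict[str, str]) -> str:
    """Assemble a system prompt from named sections in a fixed order."""
    unknown = set(sections) - set(SECTION_ORDER)
    if unknown:
        raise ValueError(f"Unknown prompt sections: {sorted(unknown)}")

    parts = []
    for name in SECTION_ORDER:
        body = sections.get(name, "").strip()
        if body:  # skip sections that are missing or empty
            parts.append(f"<{name}>\n{body}\n</{name}>")
    return "\n\n".join(parts)


prompt = build_system_prompt(
    {
        "background": "You are a scheduling assistant for a small team.",
        "constraints": "Reply in plain English. Never share private notes.",
    }
)
```

Because each section lives under its own key, a change to the compliance rules touches only `constraints` and leaves the rest of the prompt alone.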

Define Tool Contracts

Ambiguity is costly. Clear rules like “The calendar_search tool only accepts YYYY-MM-DD” reduce misuse.

Use Dynamic Retrieval

Instead of loading everything upfront, use just-in-time context. Fetch the right knowledge when needed, through retrieval-augmented generation (RAG) or database queries.

Build Memory Architecture

Agents need layers of memory: summaries for short-term, notes for medium-term, and databases for long-term. This keeps context lean while preserving continuity.

Split Into Sub-Agents

Different tasks demand specialized agents. Research, drafting, and scheduling each perform better with their own tailored context, coordinated by an orchestrator.
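Two of these principles are easy to show in code: a tool contract enforced at the boundary, and an orchestrator that routes work to sub-agents with their own context. This is a rough sketch under assumptions. Only the tool name `calendar_search` and its YYYY-MM-DD rule come from the text above; the in-memory calendar, the `SubAgent` type, and the stub handlers (which stand in for model calls) are hypothetical.

```python
import re
from dataclasses import dataclass
from datetime import date
from typing import Callable

# Hypothetical in-memory calendar standing in for a real backend.
_EVENTS = {date(2025, 9, 29): ["Quarterly review"]}
_DATE_RE = re.compile(r"[0-9]{4}-[0-9]{2}-[0-9]{2}")


def calendar_search(day: str) -> list[str]:
    """Tool contract: `day` must be a valid date written as YYYY-MM-DD."""
    if not _DATE_RE.fullmatch(day):
        raise ValueError(f"calendar_search expects YYYY-MM-DD, got {day!r}")
    # fromisoformat also rejects impossible dates such as 2025-13-45.
    return _EVENTS.get(date.fromisoformat(day), [])


@dataclass
class SubAgent:
    context: str               # only what this agent needs to see
    run: Callable[[str], str]  # stub standing in for a model call


def schedule(request: str) -> str:
    events = calendar_search(request)
    return ", ".join(events) or "No events"


AGENTS = {
    "scheduling": SubAgent("You manage calendars. Dates are YYYY-MM-DD.", schedule),
    "drafting": SubAgent("You draft short emails.", lambda req: f"Draft: {req}"),
}


def orchestrate(task_type: str, request: str) -> str:
    """Route a request to the sub-agent registered for its task type."""
    if task_type not in AGENTS:
        raise KeyError(f"No sub-agent registered for {task_type!r}")
    return AGENTS[task_type].run(request)
```

A rejected call such as `calendar_search("29/09/2025")` fails with a message that restates the contract, which gives the agent something concrete to correct on its next attempt.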

Case in Point: Legal Research Assistant

Imagine an AI assistant tasked to: “Summarize all recent EU data privacy rulings relevant to fintech startups.”

Naïve approach: dump 200 pages of legal text into the model. The result? Expensive, slow, vague.

Context-engineered approach:

Retrieve the 10 most relevant cases with vector search

Summarize each into structured notes

Maintain a running “case matrix” memory

Feed compacted notes for final synthesis
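The four steps above can be sketched end to end. Everything here is a stand-in chosen so the example runs on its own: the bag-of-words `embed` function replaces a real embedding model and vector index, `summarize` replaces a model call by keeping only the first sentence, and the corpus entries are made-up placeholders, not real rulings.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class CaseNote:
    case_id: str
    summary: str


def embed(text: str) -> Counter:
    # Toy bag-of-words "embedding"; a real system would use an embedding model.
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, corpus: dict[str, str], k: int) -> list[str]:
    """Step 1: return the ids of the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(corpus, key=lambda cid: cosine(q, embed(corpus[cid])), reverse=True)
    return ranked[:k]


def summarize(case_id: str, text: str) -> CaseNote:
    """Step 2: placeholder for a model call; keeps only the first sentence."""
    return CaseNote(case_id, text.split(".")[0].strip() + ".")


def build_synthesis_context(query: str, corpus: dict[str, str], k: int = 10) -> str:
    # Step 3: the running "case matrix" is a dict of structured notes.
    case_matrix: dict[str, CaseNote] = {}
    for cid in retrieve(query, corpus, k):
        case_matrix[cid] = summarize(cid, corpus[cid])
    # Step 4: only the compact notes, not the full texts, reach the final prompt.
    lines = [f"- {note.case_id}: {note.summary}" for note in case_matrix.values()]
    return f"Question: {query}\nCase notes:\n" + "\n".join(lines)
```

The final synthesis call sees a few lines per case instead of the full source text, and that is where the token savings come from.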

Anthropic’s benchmarks show that with summarization + selective retrieval, accuracy jumped by 27% while token usage (and cost) dropped by over 40%.

This isn’t just efficiency. It’s reliability. The assistant now delivers actionable insights instead of a wall of text.

The Strategic Lens: Why Leaders Should Care

For executives and strategists, context engineering isn’t just a technical detail; it’s a governance question.

Risk: Poor context design can cause misinterpretations, compliance failures, or exposure of sensitive data.

Cost: Input cost scales with the number of tokens sent on every call. Inefficient design can bloat AI budgets.

Capability: Smart context engineering yields more accurate, explainable, and scalable agents.
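The cost point comes down to simple arithmetic. Here is a back-of-the-envelope sketch; the function names, the price per million tokens, and the traffic figures are made-up inputs for illustration, so substitute your provider's actual pricing and your own volumes.

```python
def input_cost(tokens: int, price_per_million: float) -> float:
    """Cost of sending `tokens` input tokens at a flat per-million price."""
    return tokens / 1_000_000 * price_per_million


def monthly_savings(
    tokens_per_request: int,
    requests_per_month: int,
    reduction: float,          # fraction of input tokens removed, e.g. 0.40
    price_per_million: float,  # hypothetical price, in your currency
) -> float:
    before = input_cost(tokens_per_request * requests_per_month, price_per_million)
    after = before * (1 - reduction)
    return before - after


# Hypothetical workload: 50,000 input tokens per request, 10,000 requests a month,
# a price of 3.00 per million input tokens, and a 40% cut in tokens sent.
savings = monthly_savings(50_000, 10_000, 0.40, 3.00)
```

Because cost is proportional to tokens sent, a 40% reduction in context translates directly into a 40% reduction in input spend at any volume.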

Leaders don’t need to master the implementation details. But they must ask the right questions:

What’s in the agent’s context?

How do we prune or retrieve effectively?

How do we monitor “context rot”?

The Future of Context Engineering

Anthropic predicts context windows will continue to grow (we already see 200k+ tokens). But paradoxically, this makes context engineering more important, not less.

The bigger the window, the easier it is to overload it, and the more crucial selective filtering becomes.

Expect three developments:

Native summarization & compaction tools built into LLM platforms.

Standard protocols for context exchange (like the Model Context Protocol).

Hybrid systems combining human oversight, AI retrieval, and specialized sub-agents.

Closing Reflection

Prompt engineering gave us parlor tricks. Context engineering will give us durable agents.

It’s the difference between a chatbot that drowns in information and one that delivers clarity. Between a compliance risk and a trustworthy assistant. Between experimental pilots and scalable AI adoption.

For leaders: don’t ask “What’s the right prompt?” Ask instead:

“What does my AI actually need to see, and what must I keep out of view?”

*Anthropic’s Report: Effective context engineering for AI agents, Sept 29th, 2025